To log backup or not to log backup

SQL Server has been around for decades, but I still come across companies that do not have dedicated DBAs and do not understand the concept of Log Backups, which often means they put their data at risk.

Recovery Objectives

Let’s start with the basics. The best way to pick the right backup strategy is to understand Recovery Time Objective (RTO) and Recovery Point Objective (RPO). These two are the most critical parameters in planning and designing a Disaster Recovery Strategy for SQL Server and any IT system.

RTO

The recovery time objective tells us how much time we have to recover from a disaster, from when the disaster starts to when the problem is completely resolved.

For example, If someone drops the production database and it takes two hours to restore it from backup, we would be looking at an RTO of 2 hours to restore the database plus any additional time required to figure out what has happened, find the backups, find the restore scripts and so on.

If you have an RTO requirement of 15 minutes and restoring the database from backup takes 2 hours, you need to think about ways to minimise the restore time.

There are ways to reduce recovery times which we will discuss in future posts.

RPO

The recovery point objective tells us how much data we are accepting to lose.

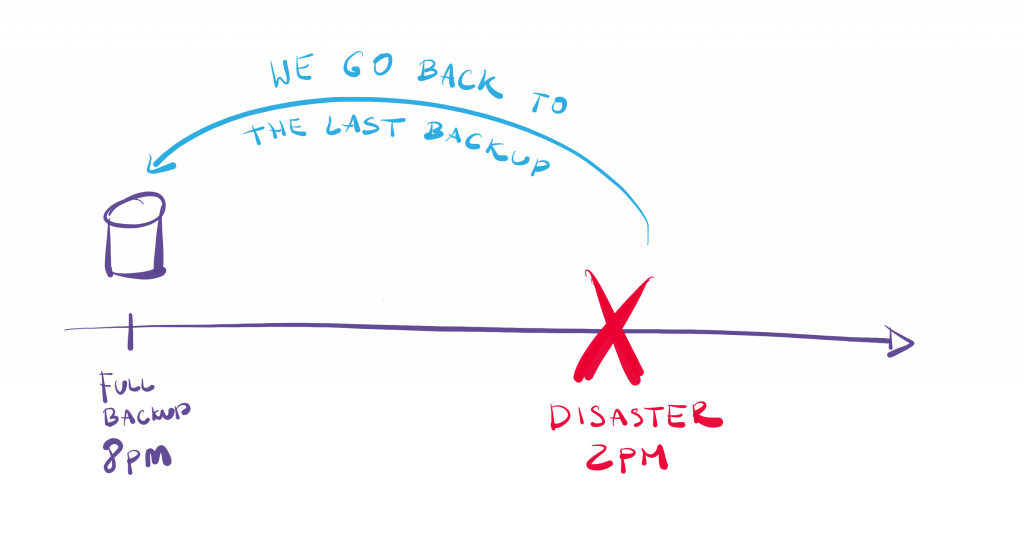

For example, If someone drops the production database at 2 pm and the last backup was at 8 pm the previous day, we are going to lose 18 hours worth of data. This is because any data that has been written since the last backup is now gone.

Since we already established it would take us 2 hours to backup or restore the database in question, there is no way we could be running backup every 5 minutes to satisfy your RPO requirement. In such a case, we need to look at different backup techniques.

SQL Server Transaction Log

To understand how backups work in SQL Server, we have to start with the Transaction Log.

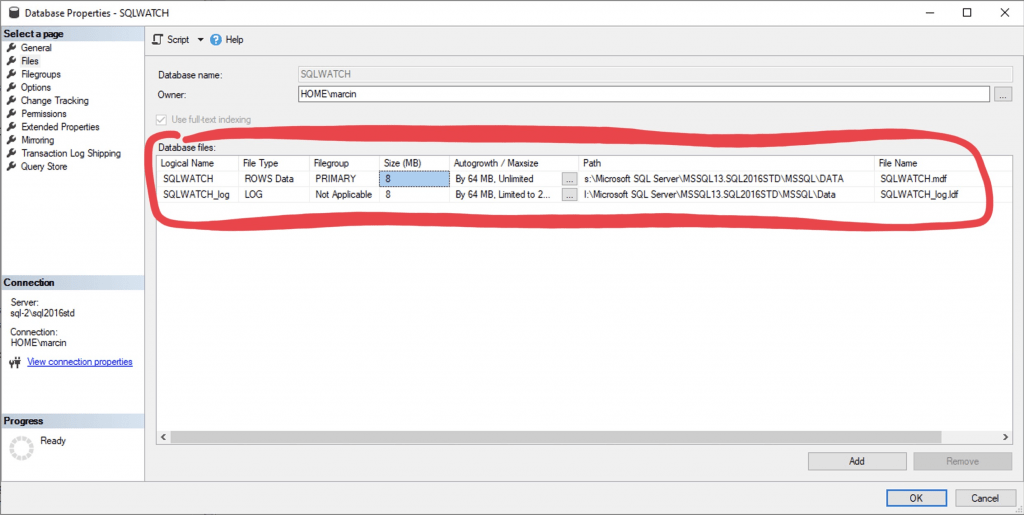

SQL Server Database consists of a minimum of two files: Main Database File (MDF), where our data is kept and the Log Database File (LDF) where the information about transactions is kept:

Relational databases have transactions to comply with ACID properties. When we write data into the SQL Server database, we create a transaction.

Explicit Transactions

Explicit transaction means that we BEGIN and COMMIT our own transaction:

BEGIN TRANSACTION

INSERT INTO dbo.users (Name, Surname)

VALUES ('Marcin','Gminski')

COMMIT TRANSACTIONThe benefit of using explicit transactions is that we can undo all the changes with the ROLLBACK command:

BEGIN TRANSACTION

INSERT INTO dbo.users (Name, Surname)

VALUES ('Marcin','Gminski')

ROLLBACK TRANSACTIONImplicit transactions

The SQL Server handles implicit transactions for us, and there is no way back unless the query fails, SQL Server will undo it for us to satisfy ACID properties:

INSERT INTO dbo.users (Name, Surname)

VALUES ('Marcin','Gminski')It is, however, always a transaction.

The purpose of the Transaction Log file

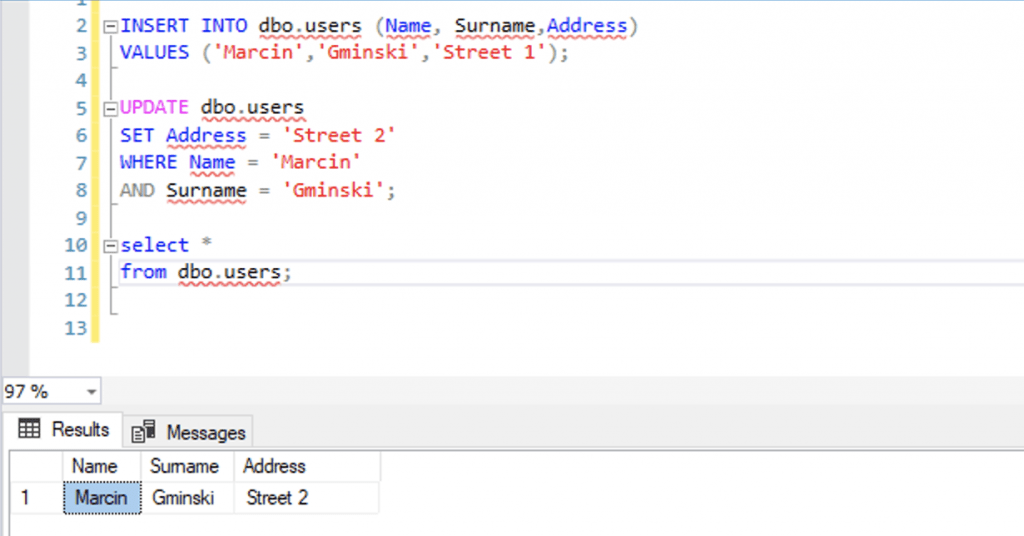

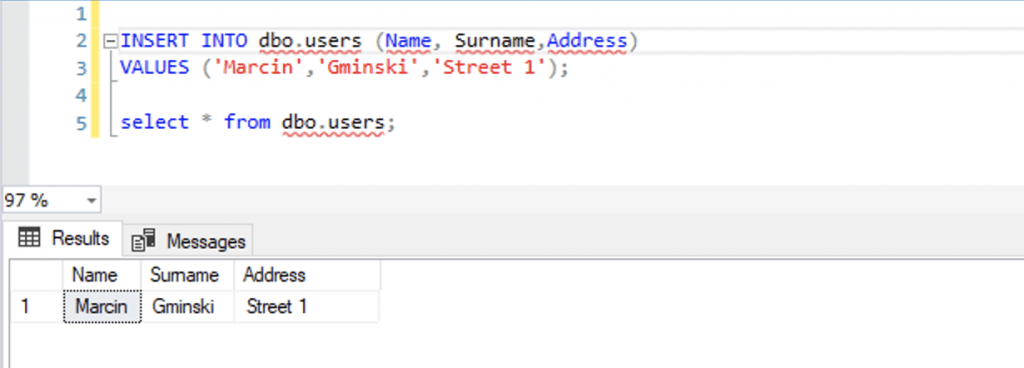

The transaction log is like a journal and contains information about all the changes (transactions) we have made in our database in chronological order. The data goes into the MDF and the information about it, the metadata goes into the LDF. This allows us to “re-run” all transactions and recreate the data in the MDF at a given point in time. Suppose we have run the following query:

INSERT INTO dbo.users (Name, Surname,Address)

VALUES ('Marcin','Gminski','Street 1');

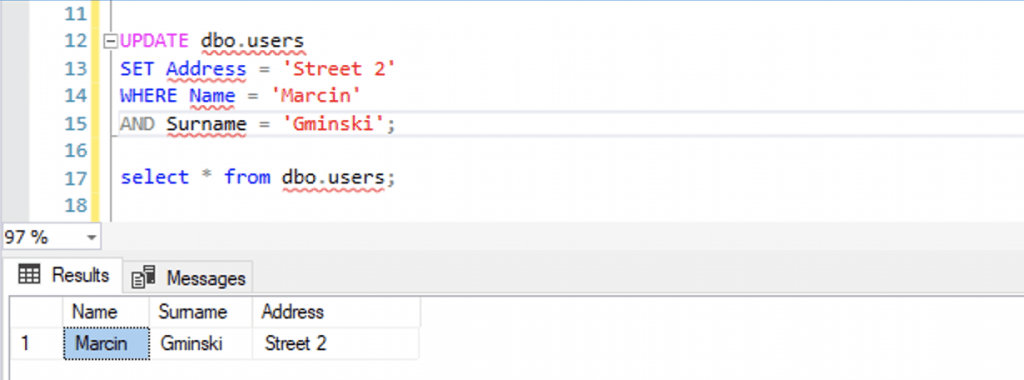

UPDATE dbo.users

SET Address = 'Street 2'

WHERE Name = 'Marcin'

AND Surname = 'Gminski';We have inserted a record into the database with values Marcin, Gminski, Street 1. We have then immediately updated this record and changed the Address to Street 2.

After the above operations, the “final” Address value in the Data File (MDF) is Street 2 but, the transaction log (LDF) would contain both operations. The original insert and then update (and all sorts of other information that is not easily readable by us, humans).

Well, actually, that’s not entirely true. What is kept in the Transaction Log File would depend on the recovery model of the database.

You can even run a query to see the content of the log file:

SELECT * FROM sys.fn_dblog(NULL,NULL)Database Recovery Models in SQL Server

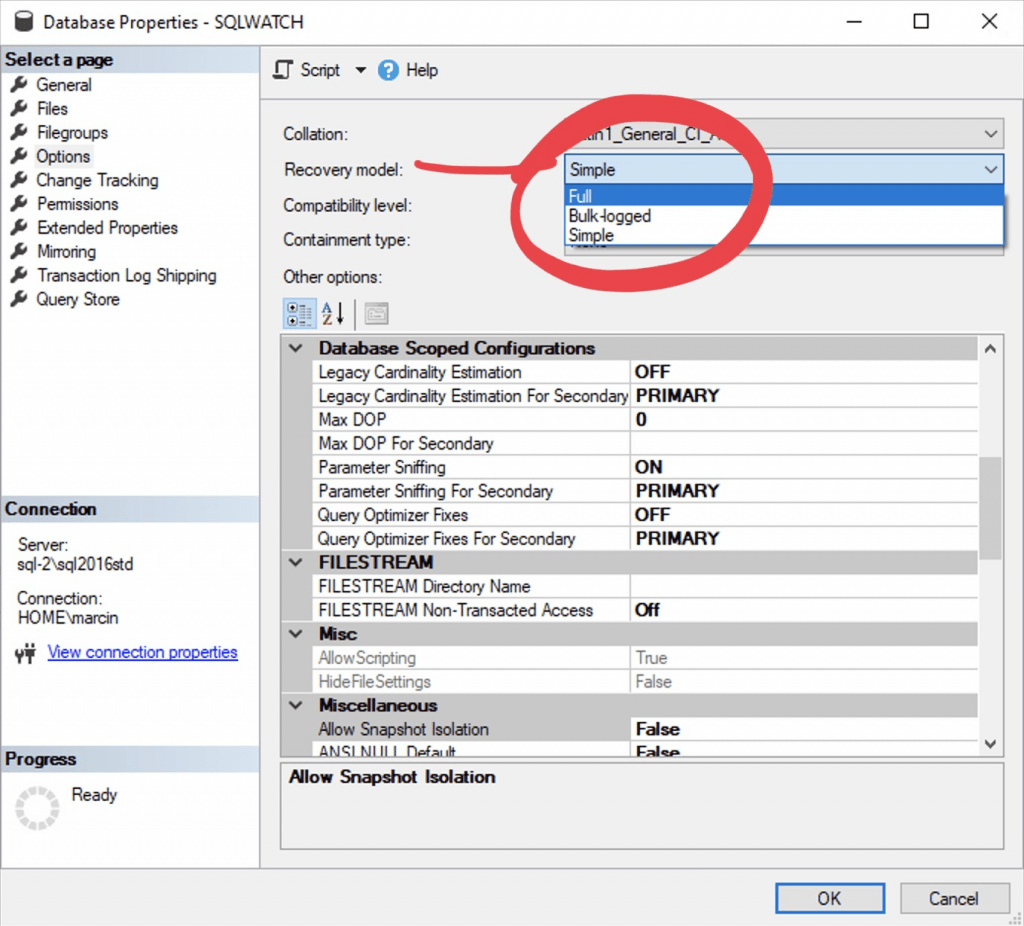

Simple

In the Simple recovery model, once the transaction is committed and data is safe in the Data File (MDF) the information contained in the Transaction Log (LDF) is discarded. This keeps the log file empty and relatively small but prevents us from being able to “recreate the history” or “go back in history”.

This option is good for databases with large RPO or where data can be reloaded from the source at any time – for example from flat files like in a Data Warehouse “Landing Zone”.

In this mode, we can only take Full or Differential Database backups. This usually happens once a day, at night. This means that the most recent recovery point will be the last database backup.

Full

In the Full recovery model, once the transaction is committed and data is safe in the Data File (MDF) the information contained in the Transaction Log (LDF) is retained. This keeps the log file full of information which can grow very large very quickly but it also allows us to recreate the history. But what can we do to keep it small? We back it up!

Backing up Transaction Log Files “moves” the information from the LDF into the backup file.

YOU MUST BACKUP the transaction log frequently to be able to recover information from it. You can ONLY recover from a Log BACKUP. It is NOT ENOUGH to “just have” the transaction log without any backups.

Since log backups contain only the information since the last log backup, they are relatively small but you need them all, in order to recover the database. Do not delete any log backups since the last full database backup.

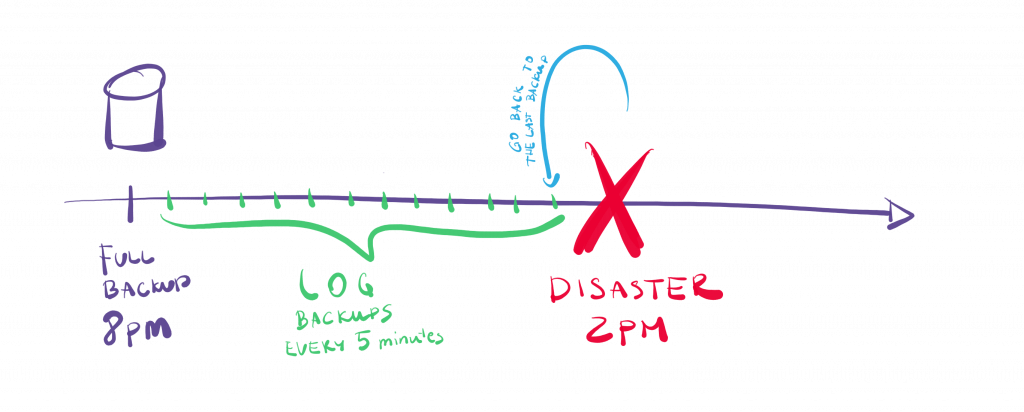

The recovery process would be to restore the nightly backup from 8 pm and then “roll forward” all the transaction log backups to the most recent one. Since there may be many backup files to restore, you best have this automated. The total recovery time may still be very long, and although you will satisfy your RPO and not lose a lot of data, you may still breach your RTO requirements because the recovery process takes too long.

This option is best for databases that require very low RPO. If your RPO is 1 minute, you must be backing up the transaction log at least once every minute.

Bulk Logged

This is like a Full recovery model but with the ability to switch to the Simple Recovery model and then back to Full. This is very handy if we have a very large operation that we do not want to go into the Transaction Log File (LDF) to save space and improve performance.

Performance impact

A common question: is there a performance difference between Simple and Full recovery models? No, there isn’t as far as database performance is concerned but since you will be running lots of log backups, there will be some overhead in CPU and IO. How much will depend on many factors, likely negligible but you will have to test it yourself.

Remember though, this isn’t about performance but about how much data you can afford to lose. Relational databases come with a performance overhead, such as ensuring ACID properties, managing referential integrity, constraints, indexes, statistics and so on. Frequent log backups will be one of them. If we didn’t need any of the benefits of the relational database we would have just kept all the data in a *.txt file, or NoSQL database.

Demo time

Ok, we have explained the basics and I can now present you with some actual facts and demos. Let’s go back to our previous example of INSERT followed by an UPDATE statement:

INSERT INTO dbo.users (Name, Surname,Address)

VALUES ('Marcin','Gminski','Street 1');

UPDATE dbo.users

SET Address = 'Street 2'

WHERE Name = 'Marcin'

AND Surname = 'Gminski';Let’s clean the table and start from scratch:

DELETE FROM dbo.users;Let’s now insert our data:

Before the UPDATE let’s take a log backup:

BACKUP LOG [LOGBACKUPTEST] TO DISK = 'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log1.bak'And run our update:

And take another log backup:

BACKUP LOG [LOGBACKUPTEST] TO DISK = 'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log2.bak'Ok, so at this point the Address value in the table dbo.users is showing Street 2. So how do we go back, so it shows Street 1. Well, we have to invoke the recovery process:

- Restore the database from the Database Backup.

- Restore the first transaction log — this contains the first value

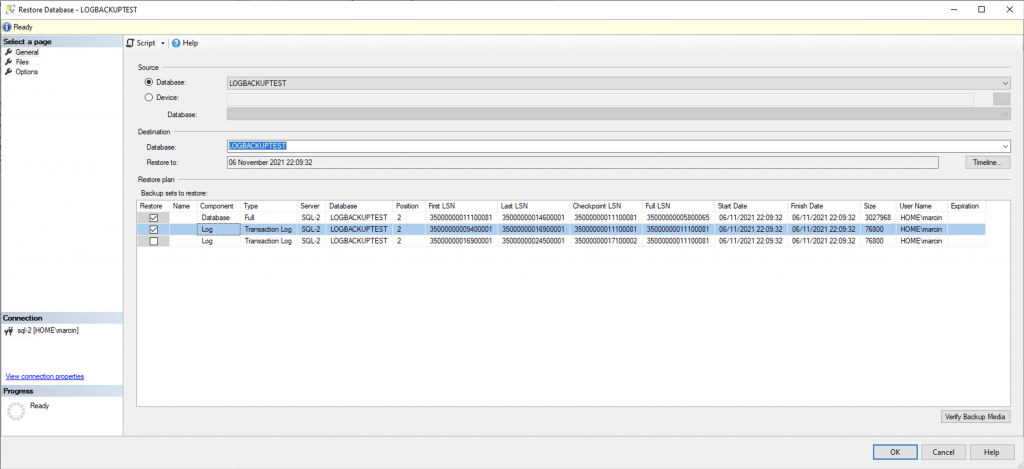

For simplicity, we can also do so via the SSMS GUI if we go to “Database” -> “Tasks” -> “Restore” -> “Database” to see all data (Full) backups and Transaction Log backups. We can then cherry-pick what to restore:

USE [master]

RESTORE DATABASE [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest.bak' WITH FILE = 1, NORECOVERY, REPLACE, NOUNLOAD, STATS = 5

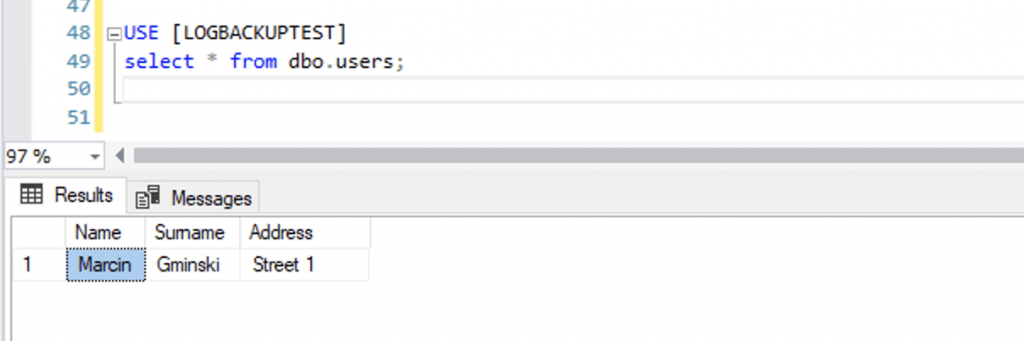

RESTORE LOG [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log1.bak' WITH FILE = 1, NOUNLOAD, STATS = 5Let’s see what’s in our table:

We now have the first value before we run our UPDATE.

Let’s now restore to the second log backup:

USE [master]

RESTORE DATABASE [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest.bak' WITH FILE = 1, NORECOVERY, REPLACE, NOUNLOAD, STATS = 5

RESTORE LOG [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log1.bak' WITH FILE = 1, NOUNLOAD, NORECOVERY, STATS = 5

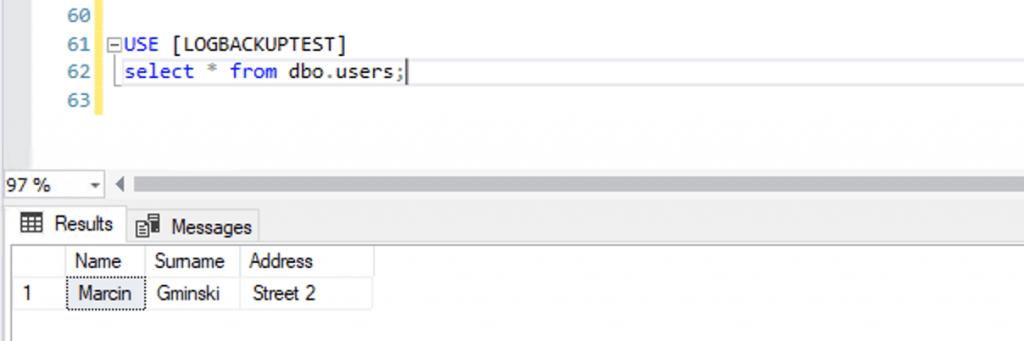

RESTORE LOG [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log2.bak' WITH FILE = 1, NOUNLOAD, STATS = 5

GOAnd the Address value has been “rolled forward” and now shows the value as modified by the UPDATE statement:

Yep. That’s how the recovery to a point in time works in SQL Server. You need those log backups done often!

Repeat after me:

I will be backing up the Transaction Log backup frequently in order to be able to restore to a point in time.

Complete script for you to play with:

USE [master];

-- Create test database:

CREATE DATABASE [LOGBACKUPTEST];

GO

-- Make sure we are running in Full Recovery model:

ALTER DATABASE [LOGBACKUPTEST] SET RECOVERY FULL WITH NO_WAIT;

GO

USE [LOGBACKUPTEST];

GO

-- Create test table:

CREATE TABLE dbo.users (

Name varchar(50),

Surname varchar(50),

Address varchar(100)

);

-- Create the first full baseline backup:

BACKUP DATABASE [LOGBACKUPTEST] TO DISK = 'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest.bak';

-- Insert first set of data:

INSERT INTO dbo.users (Name, Surname,Address)

VALUES ('Marcin','Gminski','Street 1');

select * from dbo.users;

-- Backup the transaction log:

BACKUP LOG LOGBACKUPTEST TO DISK = 'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log1.bak'

-- run update and change Address to Street 2:

UPDATE dbo.users

SET Address = 'Street 2'

WHERE Name = 'Marcin'

AND Surname = 'Gminski';

select * from dbo.users;

-- Take the second log backup:

BACKUP LOG LOGBACKUPTEST TO DISK = 'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log2.bak'

-- Restore to a second log backup:

USE [master]

RESTORE DATABASE [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest.bak' WITH FILE = 1, NORECOVERY, REPLACE, NOUNLOAD, STATS = 5

RESTORE LOG [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log1.bak' WITH FILE = 1, NOUNLOAD, STATS = 5

GO

USE [LOGBACKUPTEST]

select * from dbo.users;

-- Restore to the second log backup:

USE [master]

RESTORE DATABASE [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest.bak' WITH FILE = 1, NORECOVERY, REPLACE, NOUNLOAD, STATS = 5

RESTORE LOG [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log1.bak' WITH FILE = 1, NOUNLOAD, NORECOVERY, STATS = 5

RESTORE LOG [LOGBACKUPTEST] FROM DISK = N'R:\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\Logbackuptest_log2.bak' WITH FILE = 1, NOUNLOAD, STATS = 5

GO

USE [LOGBACKUPTEST]

select * from dbo.users;But my boss wants low RTO and RPO

So how do we deal with situations where we cannot lose more than 5 minutes worth of data, and we have to be back up from a disaster in 15 minutes.

This is most often done by implementing one or more secondary servers hosting the same database. All data from the primary server is being replicated in real-time to the secondary servers. One example of such a mechanism in SQL Server would be Availability Groups.

However, this is where you really have to understand the difference. Suppose you have one primary server and two secondary servers, and you sleep well knowing that there is no chance on earth for all three servers to break down simultaneously. And since you have synchronous replication enabled, you are sure that the data is consistently replicated on all three servers with no delay. If the primary server goes down, all of its data will be on the remaining servers.

You have synchronous replication, and all data is synchronised in real-time.

You have synchronous replication, and all data is synchronised in real-time.

You have synchronous replication, and ALL DATA is synchronised in real-time.

Do you see where the problem is?

If someone forgets the WHERE clause and runs a DELETE statement on the primary server, this will also remove the data from ALL replicas, in real-time, before you even realise.

The only way to then recover from such an event is to restore from a backup which may still get you in trouble for breaching RTO.

Remember that secondary replicas protect against hardware failures and not against data modification. It is critical to have change windows and test and review all changes going into production databases to avoid unwanted data modifications. Just don’t give anyone direct write access to the database you really care about. Only applications and interfaces allowed. No humans. No, seriously. This is where the DBA saying “No” is perfectly justified.

Anyway, summary

You can run the Simple Recovery Model and take one backup a day if you are either ready to lose all the data since the last backup, or you have a way of getting it back and you do not care about how long it will take.

You must run the Full Recovery Model if you care about your data and if you do, TAKE THE LOG BACKUP EVERY FEW MINUTES!

You can run the Bulk Logged Model if you are adventurous, or you can learn more about data platform by attending community events

In case you ask which recovery model should be the SQLWATCH database in – I would put it in simple unless you have a reason to have in full recovery model.

Are you sure you got your backups sorted, do you sleep well? Do you need help? Let me know.

Recent Posts

Want to learn about SQLWATCH?

Marcin Gminski2023-03-28T22:07:01+01:0028th March 2023|0 Comments

SQLWATCH 4.4

Marcin Gminski2022-10-06T15:32:56+01:006th October 2022|0 Comments

T-SQL Tuesday #147 – SQL Server Upgrade Strategies

Marcin Gminski2023-07-06T23:15:07+01:008th February 2022|0 Comments

Should we run SQL Server on the default port 1433

Marcin Gminski2022-03-25T12:40:05+00:007th February 2022|3 Comments

Happy New Year!

Marcin Gminski2022-01-01T18:28:43+00:001st January 2022|0 Comments

I am a Microsoft MVP!

Marcin Gminski2021-12-02T23:15:16+00:002nd December 2021|10 Comments